Troubleshooting

Machines won't launch

When you have submitted your jobs successfully but don’t see any machines launch, the cause is usually the virtual CPU (vCPU) limit set in your AWS account. AWS (like other cloud providers) limits the number of machines you can run by default, and these limits are calculated in vCPUs. Remember that a single machine instance has multiple vCPUs. For example, if you need 10 c5.4xlarge machines, make sure your vCPU limit is at least 160 vCPUs (16 vCPUs per c5.4xlarge instance). Also remember that these limits are per account: if your account has a 1000 vCPU limit, that limit dictates how many machines Hybrik and every other service running in your account can use in total. If other processes take up some of those vCPUs, fewer will be available for Hybrik.

Note that you have to request a specific number of vCPUs for a given region. So, if you need to be running large numbers of instances in both us-east-1 and eu-west-1, then you will need to request high vCPU values for each region. You can learn more about this in the AWS vCPU Service Limits tutorial.
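
The arithmetic above is easy to script when you plan capacity for several regions. This is a minimal Python sketch, assuming you supply the vCPU count for each instance type you use (only c5.4xlarge at 16 vCPUs comes from this page) and an estimate of the vCPUs other services in the account already consume; `required_vcpus` and `headroom` are hypothetical helper names.

```python
from collections import defaultdict

# vCPUs per instance type. Only c5.4xlarge comes from this page;
# add the types used in your own computing groups.
VCPUS_PER_INSTANCE = {"c5.4xlarge": 16}


def required_vcpus(fleet):
    """Total vCPUs needed per region.

    fleet: iterable of (region, instance_type, count) tuples. Totals are kept
    per region because AWS vCPU limits are requested separately per region.
    Raises KeyError for an instance type missing from VCPUS_PER_INSTANCE.
    """
    totals = defaultdict(int)
    for region, instance_type, count in fleet:
        totals[region] += VCPUS_PER_INSTANCE[instance_type] * count
    return dict(totals)


def headroom(account_limit, used_by_other_services, needed_by_hybrik):
    """vCPUs left in one region after Hybrik and everything else in the account."""
    return account_limit - used_by_other_services - needed_by_hybrik


needs = required_vcpus([
    ("us-east-1", "c5.4xlarge", 10),
    ("eu-west-1", "c5.4xlarge", 4),
])
print(needs)                                    # {'us-east-1': 160, 'eu-west-1': 64}
print(headroom(1000, 300, needs["us-east-1"]))  # 540
```

A negative headroom means the limit for that region needs to be raised before all the jobs can run.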

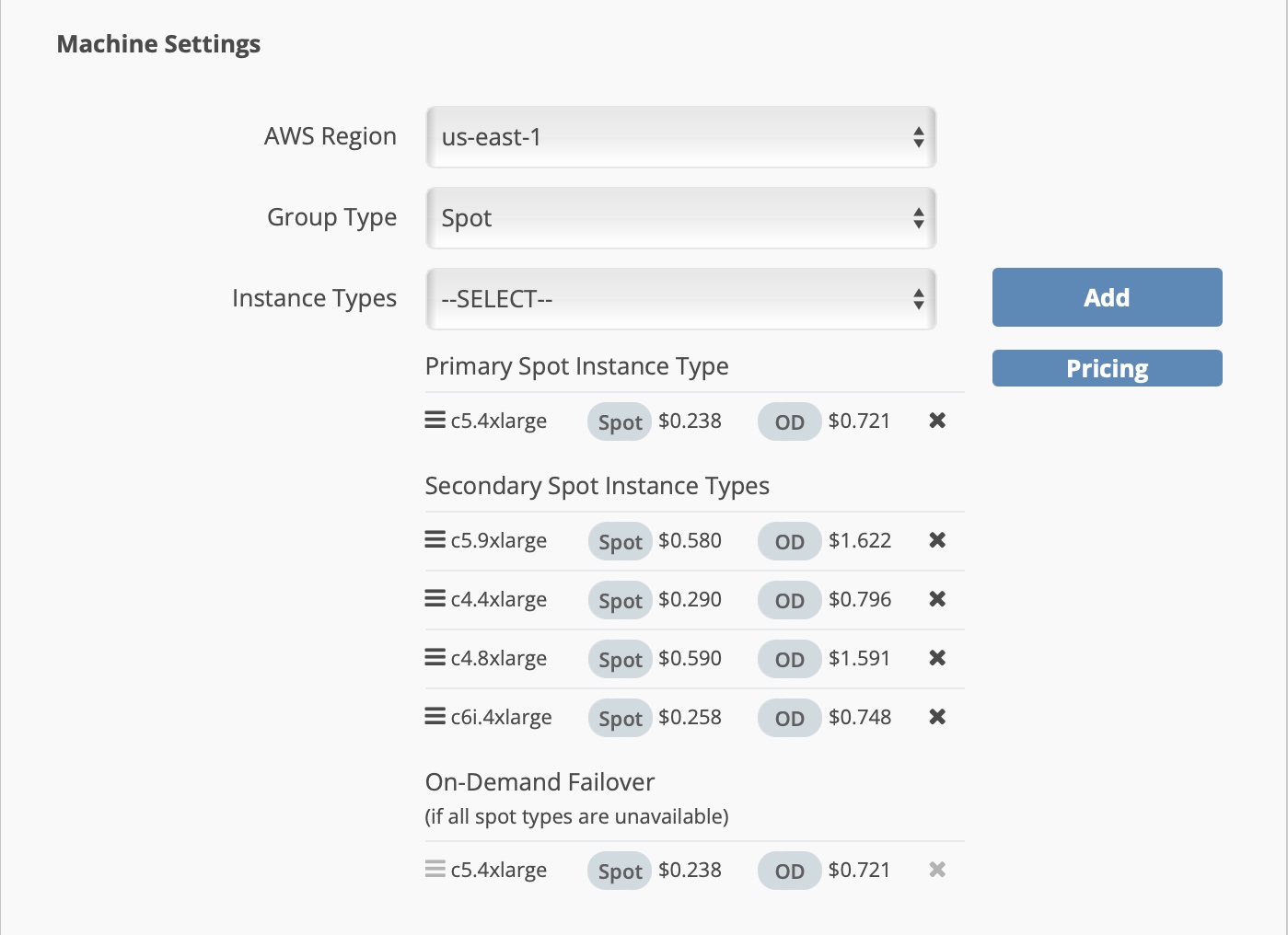

Another possibility is that AWS is out of capacity for the spot instance types selected in your computing group. We recommend adding multiple secondary spot instance types to your computing group configuration: if Hybrik detects that the primary instance type is unavailable, it requests instances from the list of secondary instance types. If none of the selected instance types can be launched and your computing group is set to "On-Demand Failover", Hybrik automatically switches to on-demand machines. If "On-Demand Failover" is not set, Hybrik cannot launch machines until spot capacity becomes available again.
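
The launch order described above can be modeled roughly as follows. This is an illustrative Python sketch, not Hybrik's implementation: `available` is a hypothetical stand-in for the spot capacity AWS currently has, and the sketch assumes that failover requests the primary instance type on demand, which this page does not specify.

```python
def choose_instance(primary, secondaries, available, on_demand_failover):
    """Return (instance_type, market), or None if nothing can launch yet."""
    # Try the primary spot type first, then each secondary type in order.
    for instance_type in [primary, *secondaries]:
        if instance_type in available:
            return instance_type, "spot"
    if on_demand_failover:
        # Assumption: failover uses the primary type as an on-demand machine.
        return primary, "on-demand"
    # Without failover, the job waits until spot capacity returns.
    return None
```

With only a primary type configured and no failover, any spot shortage leaves the job waiting, which is why adding secondary types helps.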

Error: "machine id was terminated"

"result_define": "SYS_TSK_MACHINE_TERMINATED",
"message": "machine id 1476067(i-0f004775272cbbf08) was terminated",

This error means that the cloud provider reclaimed a spot instance. You can control Hybrik's retry behaviour in this scenario. By default, Hybrik retries the task, but if the task exhausts its allowed retries, the error becomes terminal. This typically happens on long-running jobs during periods of high spot-market volatility.

Suggestions

- Ensure that your computing group is configured with multiple secondary spot instance types; this allows Hybrik to request different instance types if the primary spot instance type is unavailable or has a high rate of terminations.

- Increase the retry count (the "count" value in the retry settings below).

- On long-running jobs (multi-hour), switch to on-demand instances.

"task": {
  "retry_method": "retry",
  "retry": {
    "count": 1,
    "delay_sec": 30
  }
},
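
If you generate job JSON programmatically, a small helper can emit the retry fragment above with a higher count. This Python sketch uses a hypothetical `retry_settings` function; the count of 3 in the usage line is an arbitrary example, not a recommended value.

```python
import json


def retry_settings(count, delay_sec=30):
    """Build the "task" retry fragment with the given retry count and delay."""
    if count < 0:
        raise ValueError("retry count must be non-negative")
    return {
        "task": {
            "retry_method": "retry",
            "retry": {"count": count, "delay_sec": delay_sec},
        }
    }


# Merge the result into the task element of your job JSON.
print(json.dumps(retry_settings(3), indent=2))
```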

S3 access error: "cannot access file (403 Forbidden)"

This error message relays the response Hybrik receives from AWS S3. The IAM user may have full S3 permissions, but access can also be restricted in several other places:

- If the bucket is owned by another account, the owning account (for example, through its bucket policy) must grant access to the IAM user whose credentials Hybrik uses, in addition to that user's own permissions.

- An S3 bucket policy on the bucket could deny access.

- An explicit "deny" permission or a permissions boundary set on the IAM user could cause the denial.

- The objects themselves could have permissions that deny access.

- An ACL on the bucket or object could deny access.
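
When working through the list above, it can help to think in terms of AWS's policy evaluation logic: an explicit deny overrides any allow, and a cross-account request must be allowed both by the requester's IAM policies and by the bucket owner's resource-based policy. The Python sketch below is a deliberately simplified model of those rules for a single request; it ignores service control policies, session policies, and object ownership, and its class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Policies:
    """Simplified view of what applies to one S3 request (hypothetical model)."""
    identity_allows: bool                   # the IAM user's own policies allow the action
    resource_allows: bool                   # bucket policy or ACL grants the user access
    explicit_deny: bool = False             # any applicable policy has a matching Deny
    boundary_allows: Optional[bool] = None  # None means no permissions boundary is set
    same_account: bool = True               # bucket belongs to the IAM user's account


def is_allowed(p: Policies) -> bool:
    # 1. An explicit deny anywhere overrides every allow.
    if p.explicit_deny:
        return False
    # 2. A permissions boundary caps what the identity policies can grant.
    identity_ok = p.identity_allows and p.boundary_allows is not False
    # 3. Same account: either side may grant. Cross account: both must.
    if p.same_account:
        return identity_ok or p.resource_allows
    return identity_ok and p.resource_allows
```

For example, a user with full S3 permissions reading a bucket in another account still gets a 403 when the bucket policy does not grant them access: `is_allowed(Policies(identity_allows=True, resource_allows=False, same_account=False))` returns False.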

The easiest way to check whether Hybrik has access to the files is to open the Hybrik web console and go to the Storage Browser. Navigate to the files as your Hybrik user and try to start a download. If the download starts, Hybrik has access. If the download does not start and you get an "access denied" message, Hybrik does not have access.

Also note that you can store multiple IAM users in your Hybrik Credentials Vault. For example, you might use one set of credentials to launch machines and another set to access the data. You can reference these other credentials in the Location object describing your source or destination.
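
A minimal Python sketch of a location that points at a specific entry in the Credentials Vault. The field names mirror the GCP location snippet elsewhere on this page; the "s3" storage_provider value, the bucket URL, and the credentials key name "my-data-access-credentials" are assumptions for illustration.

```python
def location_with_credentials(url, credentials_key, storage_provider="s3"):
    """Location payload that uses a specific Credentials Vault entry
    instead of the default credentials (field names as in the GCP example)."""
    return {
        "storage_provider": storage_provider,
        "url": url,
        "access": {"credentials_key": credentials_key},
    }


# Hypothetical bucket and credentials key names.
source_location = location_with_credentials(
    "s3://my-media-bucket/mezzanine/", "my-data-access-credentials"
)
```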

Error: "task invalid, all input documents filtered away"

Example error:

"result_define": "SYS_TSK_CONFIG_ERROR",
"message": "task invalid, all input documents filtered away: 20205853(0)",

This happens whenever a Hybrik task has no inputs passed to it, typically when a task fails and its failure path leads to a notification task. When a task fails, the internal "document" (the record Hybrik passes between tasks) turns into an error message and no longer carries any inputs or outputs. That is why you can add definitions to notification tasks but cannot use {source_basename} and similar placeholders in notification tasks that run after a failure.

true_peak value set in DPLC analyze

Occasionally the true_peak set in the Dolby Professional Loudness Correction (DPLC) analyze task is not honored as expected in the output file. For example, in an hour-long source with relatively quiet sound throughout but a brief (1 second) period of very loud sound (e.g. gunfire), the true peak may end up higher than the value set, because of the very brief but very large difference in levels.

The effects of loudness limiting on the shape of an audio waveform cause anomalies in certain cases when true peak limiting is in force in a file-based workflow. Dolby Professional Loudness Correction lets you specify whether to use true peak or sample peak as the basis for loudness limiting. The professional limiter uses a high-quality algorithm designed for broadcast quality control and content-creation applications. Nonetheless, the output will, on rare occasions, slightly exceed the true peak limit. This happens because limiting itself changes the shape of the waveform in a way that can raise true peaks. To compensate, DPLC applies an adjustment factor to the whole program; any remaining anomalies will be irrelevant after perceptual encoding.

If you have an absolute hard limit, you can lower the true_peak value in the analyze task to get the desired result. For example, if you need a true peak of -1 or lower but the output is above -1, set true_peak to -2 (or lower).
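
The adjustment in the last paragraph can be expressed as a simple heuristic: lower true_peak in whole dB steps until the reduction covers the overshoot you measured. This Python sketch is an assumed rule of thumb, not a DPLC feature, and the corrected output should still be measured again.

```python
import math


def adjusted_true_peak(limit_db, measured_db, step_db=1.0):
    """Suggest a lower true_peak for the analyze task.

    limit_db:    the hard ceiling the output must meet (e.g. -1)
    measured_db: the true peak measured in the previous output
    step_db:     size of each reduction step
    """
    overshoot = measured_db - limit_db
    if overshoot <= 0:
        return limit_db  # output already meets the limit
    steps = math.ceil(overshoot / step_db)
    return limit_db - steps * step_db


print(adjusted_true_peak(-1, -0.6))  # -2.0, matching the example above
```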

Video and audio target files have different durations when there is a frame rate change

When the frame rate changes between source and target, the duration of the audio target may differ from the duration of the video target, and video and audio will be out of sync when the packaged OTT assets are played back.

To resolve this issue, apply a speed change filter to all audio target containers, as below. This example converts a 24 fps source to a 23.98 fps video target:

"container": {
  "kind": "fmp4",
  "segment_duration": 5,
  "filters": [
    {
      "kind": "speed_change",
      "payload": {
        "factor": "(24000/1001)/24",
        "pitch_correction": true
      }
    }
  ]
},
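
The factor is the target frame rate divided by the source frame rate. This Python sketch, using the standard fractions module, shows why the audio has to be slowed down as well: playing 24 fps material at 24000/1001 fps stretches every duration by 1001/1000. The function names are illustrative.

```python
from fractions import Fraction


def speed_change_factor(source_fps, target_fps):
    """Factor for the speed_change filter: target rate / source rate."""
    return Fraction(target_fps) / Fraction(source_fps)


def retimed_duration(duration_s, factor):
    """Playing at `factor` times the original speed divides duration by factor."""
    return Fraction(duration_s) / factor


factor = speed_change_factor(24, Fraction(24000, 1001))
print(factor)                                 # 1000/1001
print(float(retimed_duration(3600, factor)))  # 3603.6
```

So a one-hour 24 fps source becomes 3603.6 seconds at 23.976 fps, and an audio track that is not slowed by the same factor ends 3.6 seconds early.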

BIF task: filenames of images input to a BIF creator must be incremental numbers

Error: "BIF file < 100 byte, invalid"

A sequence of thumbnail JPEG files can be used as the source for a BIF creator. The input JPEG filenames must consist only of incremental numbers, with no letters before them.

Acceptable filenames: 0000000.jpg, 0000001.jpg, 0000002.jpg

Unacceptable filenames: image00001.jpg, file100.jpg, still0001.jpg

You can create numbered outputs in Hybrik by setting "file_pattern": "%07d.jpg" in the transcode task.
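
If your thumbnails already exist with names like those in the unacceptable list, a Python sketch such as this one can check names and plan a rename to the same %07d.jpg pattern. It assumes the frame order is given by the last run of digits in each filename; the helper names are hypothetical.

```python
import re
from pathlib import Path


def is_valid_bif_input(name):
    """True for digits-only JPEG names such as 0000001.jpg."""
    return re.fullmatch(r"\d+\.jpg", name) is not None


def plan_renames(names, start=0):
    """Map each existing name to a %07d.jpg name, keeping numeric order."""
    def frame_number(name):
        digits = re.findall(r"\d+", Path(name).stem)
        return int(digits[-1]) if digits else -1  # names without digits sort first

    ordered = sorted(names, key=frame_number)
    return {old: "%07d.jpg" % i for i, old in enumerate(ordered, start=start)}


print(plan_renames(["still0002.jpg", "still0001.jpg"]))
# {'still0001.jpg': '0000000.jpg', 'still0002.jpg': '0000001.jpg'}
```

Applying the plan (for example with Path.rename) is left out so the mapping can be reviewed first.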

Special characters in FairPlay SKD

Sometimes characters that are valid in normal URLs cause failures when they appear in URLs in Hybrik JSON. In that case, replace those characters with their percent-encoded ASCII codes (you can use https://www.urlencoder.org/ to convert them).

For example, Hybrik will fail on a DRM job with this FairPlay SKD URL: 'skd://fps.ezdrm.com/;000ae000-06ab-41ef-a013-ae533cca5ef8'

script failed: ERROR: missing input file name

/tmp/hybrik/package/19333989_s0-5/hybrik_bento4_v1_5_1mp42hlscc1fd718-235b-6e07-8d85-1353638c0a08.sh: line 3: 000ae000-06ab-41ef-a013-ae533cca5ef8: command not found

The cause is the ; in the SKD URL, which makes the packager fail. Change ; to %3B so that the URL becomes skd://fps.ezdrm.com/%3B000ae000-06ab-41ef-a013-ae533cca5ef8

Similarly, an ampersand in a URL such as the one below causes the same error. Replacing & with %26 fixes it.

"fairplay_uri": "skd://test.foo.com/v1/fp?kid=5fda0844993256b29ed12215&contentID=0123456789"

becomes

"fairplay_uri": "skd://test.foo.com/v1/fp?kid=5fda0844993256b29ed12215%26contentID=0123456789"

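Instead of using a web converter, you can encode just these two characters in code. This Python sketch replaces only ; and & (the characters this section reports as breaking the packager) and leaves everything else untouched, so an existing %3B is not double-encoded. The function name is hypothetical.

```python
# Only the characters reported above; extend this table if you hit others.
SKD_ESCAPES = str.maketrans({";": "%3B", "&": "%26"})


def encode_skd_uri(uri):
    """Percent-encode ; and & in a FairPlay SKD URI."""
    return uri.translate(SKD_ESCAPES)


print(encode_skd_uri("skd://fps.ezdrm.com/;000ae000-06ab-41ef-a013-ae533cca5ef8"))
# skd://fps.ezdrm.com/%3B000ae000-06ab-41ef-a013-ae533cca5ef8
```
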
Error: "unsupported input file, more than one "traf" box in fragment"

This error occurs when "segmentation_mode": "fmp4" is included in the package task, along with "force_original_media": true. Removing the segmentation_mode from the package task is the solution, as long as the content feeding the package task has already been segmented.

MediaInfo reports "Frame rate mode: Variable"

For h264 codec outputs, you can force x264 to signal a constant frame rate by adding force-cfr=1 to x264_options:

"video": {
  "codec": "h264",
  "width": 3840,
  "height": 2160,
  "profile": "high422",
  "level": "5.1",
  "frame_rate": "23.976",
  "chroma_format": "yuv422p10le",
  "bitrate_kb": "240000",
  "color_primaries": "bt709",
  "color_trc": "bt709",
  "color_matrix": "bt709",
  "exact_gop_frames": 1,
  "x264_options": "force-cfr=1"   <---------------
},

Video and audio streams in the same container have different durations

It is normal for video and audio streams to have slightly different durations, because the audio stream must be written in complete frames and the video and audio frame boundaries don’t align within the container.

Video streams have durations calculated based on the frame count divided by the fps rate (e.g. 507/25 = 20.28).

Audio stream durations are also calculated as the number of frames divided by the frame rate, but the audio frame rate is not the same as the video frame rate: it is the sampling rate divided by the samples per frame (e.g. 48000/1024 = 46.875). So for audio, the duration is first calculated as 953/46.875 = 20.331, but the last frame then has an adjustment of -8 ms, as seen in MediaInfo ("Duration_LastFrame": -8 ms). With this -8 ms accounted for, the final audio duration is 20.323.
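
The two calculations above can be reproduced in a few lines of Python. This sketch assumes 1024 samples per audio frame, as in the example, and takes the last-frame adjustment (the -8 ms that MediaInfo reports) as an input.

```python
def video_duration(frame_count, fps):
    """Video duration in seconds: frame count / frames per second."""
    return frame_count / fps


def audio_duration(frame_count, sample_rate, samples_per_frame, last_frame_adjust_s=0.0):
    """Audio duration in seconds from whole audio frames, plus any
    last-frame adjustment reported by MediaInfo (Duration_LastFrame)."""
    audio_frame_rate = sample_rate / samples_per_frame  # 48000 / 1024 = 46.875
    return frame_count / audio_frame_rate + last_frame_adjust_s


print(round(video_duration(507, 25), 3))                   # 20.28
print(round(audio_duration(953, 48000, 1024, -0.008), 3))  # 20.323
```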

MediaInfo reports "Format settings, Reference frames: 4 frames"

Format profile : High@L4
Format settings : CABAC / 4 Ref Frames
Format settings, CABAC : Yes
Format settings, Reference frames : 4 frames <----------

MediaInfo reports 4 reference frames even when the video settings in a job specify "refs": 2 or "refs": 3.

To enforce a particular reference frame count, set b_pyramid to 1. If it is set to 2 (the default), x264 forces a minimum of 4 reference frames.

Remove "menu" as PID 4096 in MPEG transport streams

If you do not want the menu item included in your transport stream output, add "use_sdt": false to your container settings:

{
  "container": {
    "use_sdt": false,
    "kind": "mpegts",
    "muxrate_kb": 3750
  }
}

Error: "sys_svc call rejected"

If a task fails with this error, the cause may be credentials or tags:

- If you're using Google Cloud Platform (GCP), make sure that your access credentials are set correctly and that your computing group is correctly tagged.

- If you're using GCP, your defaults likely point to AWS. You need to add the GCP credentials to every location, and each task needs to be tagged with a GCP computing group.

"payload": {
  "storage_provider": "gs",
  "url": "{{source}}",
  "access": {
    "credentials_key": "my-google-compute-access-credentials"
  },
  ...
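
Because every location needs the credentials, it can be worth checking a job before submitting it. This Python sketch walks a job's JSON (as loaded with json.load, for example) and reports the path of each object whose storage_provider is "gs" but which has no access.credentials_key. It assumes locations look like the snippet above and does not check computing-group tags; the function name is hypothetical.

```python
def gs_locations_missing_credentials(node, path="$"):
    """Return JSON paths of "gs" locations without access.credentials_key."""
    missing = []
    if isinstance(node, dict):
        access = node.get("access") or {}
        if node.get("storage_provider") == "gs" and not access.get("credentials_key"):
            missing.append(path)
        for key, value in node.items():
            missing.extend(gs_locations_missing_credentials(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for i, item in enumerate(node):
            missing.extend(gs_locations_missing_credentials(item, f"{path}[{i}]"))
    return missing


job = {"elements": [
    {"payload": {"storage_provider": "gs", "url": "gs://bucket/in.mov"}},
    {"payload": {"storage_provider": "gs", "url": "gs://bucket/out/",
                 "access": {"credentials_key": "my-google-compute-access-credentials"}}},
]}
print(gs_locations_missing_credentials(job))  # ['$.elements[0].payload']
```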

Error: "Start timestamp greater than end"

"message": "exception in package task",
"details": "Start timestamp greater than end (cue #1368)",

This is typically an issue with a subtitle/caption file. The error tells you which event has a timing error (in this case cue #1368).

Suggestions:

- Check the subtitle/caption file and see if there is a timing error

- If this is an HLS package task failing specifically with VTT, this has been seen when the VTT cue timestamps start in MM:SS.sss format and then, at the one-hour mark, switch to HH:MM:SS.sss.
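
A possible workaround for the VTT case, not confirmed on this page, is to rewrite every cue timestamp in the full HH:MM:SS.sss form before packaging. The Python sketch below does that and also lists cues whose start is later than their end. It counts cues from 1 in file order, which may not match the cue numbering in Hybrik's error message; the function names are hypothetical.

```python
import re

# WebVTT timestamp: optional hours, then mm:ss.ttt
TIMESTAMP = re.compile(r"(?:(\d{2,}):)?(\d{2}):(\d{2})\.(\d{3})")


def to_ms(m):
    hours = int(m.group(1) or 0)
    minutes, seconds, millis = int(m.group(2)), int(m.group(3)), int(m.group(4))
    return ((hours * 60 + minutes) * 60 + seconds) * 1000 + millis


def format_ms(ms):
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{millis:03d}"


def normalize_vtt(text):
    """Rewrite cue timestamps as HH:MM:SS.sss; return (text, bad_cue_numbers)."""
    out, bad_cues, cue = [], [], 0
    for line in text.split("\n"):
        if "-->" in line:  # timing line
            cue += 1
            stamps = [to_ms(m) for m in TIMESTAMP.finditer(line)]
            if len(stamps) >= 2 and stamps[0] > stamps[1]:
                bad_cues.append(cue)
            line = TIMESTAMP.sub(lambda m: format_ms(to_ms(m)), line)
        out.append(line)
    return "\n".join(out), bad_cues


fixed, bad = normalize_vtt("WEBVTT\n\n59:59.500 --> 01:00:01.000\nB\n")
print(fixed.splitlines()[2])  # 00:59:59.500 --> 01:00:01.000
print(bad)                    # []
```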

Error: "video decode error: too many errors (1) at 12262"

This is usually an error with the source. This error message indicates that Hybrik encountered an error while decoding the source file at frame #12262.

You can increase the tolerance for decoding errors so that this error is ignored. In that case, Hybrik repeats the last known good frame whenever it encounters an error. This setting goes in the payload of your transcode task.

Error: "Subtitle start and stop time can not be equal"

"message": "exception in core transcode",
"details": "Subtitle start and stop time can not be equal, stop time can not be a zero and start time must be smaller than stop time",

This has been seen when a subtitle source is an SRT file (starting at 0) but the matching video has a timecode track starting at 1 hour, so the SRT file ends before the timecode track starts.

- Solution: set sync_to_timecode to false in the subtitle source's contents array:

"contents": [
  {
    "kind": "subtitle",
    "payload": {
      "sync_to_timecode": false,
      "format": "srt",
      "language": "eng"
    }
  }
]

Error: "ping timeout on first execution"

"result_define": "SYS_TSK_PING_EXPIRED",
"message": "ping timeout on first execution, overdue: 1096, permitted is: 90",

This error indicates that a machine has simply stopped reporting progress. This can occur when a complex job runs on an undersized machine instance and the processing runs out of available memory. To remedy the error, run the job on a machine instance type with more CPU and more memory. Also note that if you have increased the transcoding task count for a particular machine group, multiple transcode tasks can end up running on the same machine and competing for resources.

Error: "pipeline error: av_interleaved_write_frame(): No space left on device"

Hybrik automatically allocates EBS volumes as needed during the transcode process. Hybrik attempts to stream all transcode output directly to cloud storage, but some file formats (e.g. QuickTime) have elements that must be written after the rest of the file is complete. To do this, Hybrik writes to an attached EBS volume. This error can occur if Hybrik fails to allocate an EBS volume large enough for the target.

Solution:

- Manually specify the space needed (in bytes) for each target with size_hint:

"targets": [
  {
    "file_pattern": "{file_pattern}.mov",
    "size_hint": 300000000000,   <--- 300 GB
    ...

size_hint is specified in bytes:

- 10000000000 = 10 GB
- 100000000000 = 100 GB
- 300000000000 = 300 GB
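
To pick a size_hint, you can estimate the target's size from its bitrate and duration. This Python sketch assumes the total bitrate (video plus audio) is known in kilobits per second and adds a 20% safety margin for container overhead and bitrate peaks; the margin is an arbitrary assumption, not a Hybrik default, and the function name is hypothetical.

```python
import math


def size_hint_bytes(total_bitrate_kb, duration_s, headroom=1.2):
    """Estimate size_hint in bytes.

    total_bitrate_kb: video + audio bitrate in kilobits per second
    duration_s:       target duration in seconds
    headroom:         multiplier for overhead and bitrate peaks (assumed)
    """
    raw_bytes = total_bitrate_kb * 1000 * duration_s / 8  # bits -> bytes
    return math.ceil(raw_bytes * headroom)


# 240,000 kb/s (the bitrate_kb from the x264 example above) for two hours:
print(size_hint_bytes(240_000, 2 * 3600, headroom=1.0))  # 216000000000 (216 GB)
print(size_hint_bytes(240_000, 2 * 3600))                # with the 20% margin
```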

Error: "pipeline error: av_interleaved_write_frame(): Broken pipe"

CAUSE 1: An unusual video resolution, such as 484x272.

CAUSE 2: An SCC source being converted to timed text, when events use a combination of:

- the music note character ♪ and

- italics and

- include a line break

Error: "Cue identifier needs to be followed by timestamp (cue #0)"

This error is caused by a VTT source that does not contain cue numbers. Using VTT sources that contain cue numbers fixes it.

If VTT sources with cue numbers cannot be obtained, use this workaround: first convert the VTT to TTML, and then convert the Hybrik-generated TTML back to VTT, which will contain cue numbers.
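
If the TTML round trip is not convenient, another option you could try is adding numeric identifiers to the VTT yourself. This Python sketch inserts a number before each cue whose timing line directly follows a blank line; it is a simplified approach (it does not special-case NOTE or STYLE blocks) and has not been verified against Hybrik's parser. The function name is hypothetical.

```python
def add_cue_numbers(text):
    """Insert a numeric identifier before every cue that lacks one."""
    out, cue = [], 0
    for line in text.split("\n"):
        if "-->" in line:  # timing line
            cue += 1
            # No identifier: the timing line directly follows a blank line
            # (or is the very first line of the file).
            if not out or out[-1].strip() == "":
                out.append(str(cue))
        out.append(line)
    return "\n".join(out)


print(add_cue_numbers("WEBVTT\n\n00:00.000 --> 00:01.000\nHi\n"))
```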

Error: "pipeline error: [mp4 @ 0x55a462d40e40] Could not find tag for codec pcm_s16le in stream"

This error occurs because the MP4 container does not currently support pcm_s16le audio. If you need pcm_s16le, use MXF or MOV.

"track_sync_mode" : "aligned" vs. "track_sync_mode" : "unaligned"

This parameter controls whether Hybrik trims all the output audio to the length of the shortest audio source in the asset_complex, filling in silence for the rest of the duration. For example, in an asset_complex that has an asset_version with a 30-second video and audio file as source0 and a 10-second audio file as source1, the transcode output will have all 30 seconds of picture, but only 10 seconds of program audio and 20 seconds of silence.

Error: "standard_init_linux.go:211: exec user process caused exec format error"

When running a custom Docker image, make sure that the image has been built for Intel/AMD (x86-64) processors. This error can be caused by an image built for an ARM processor.
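
To check an image before using it, you can ask the Docker CLI which architecture it targets; x86-64 images report amd64. This Python sketch wraps `docker image inspect --format '{{.Architecture}}'` and requires Docker to be installed locally; the helper names are hypothetical.

```python
import subprocess

X86_64_NAMES = {"amd64", "x86_64"}


def is_x86_64(architecture):
    """True if an architecture string names x86-64 (Intel/AMD)."""
    return architecture.strip().lower() in X86_64_NAMES


def image_architecture(image):
    """Ask the local Docker CLI which CPU architecture an image targets."""
    result = subprocess.run(
        ["docker", "image", "inspect", "--format", "{{.Architecture}}", image],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


# Usage (requires Docker):
#   if not is_x86_64(image_architecture("my-org/my-tool:latest")): ...
```

If the check fails, rebuilding with `docker buildx build --platform linux/amd64 ...` produces an x86-64 image.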


Error: "Error dplc_correct_init FAILED with error -14"

This error can occur when measuring loudness on sources shorter than 2 minutes. For sources shorter than 2 minutes, set dialogue intelligence to false.

ATSC has a general rule for short-form content: https://www.atsc.org/wp-content/uploads/2021/04/A85-2013.pdf (sections 5.2.1 and 5.2.4)

Save time on analyze task by skipping video decoding

skip_video_decoding saves time during an analyze_task by making Hybrik bypass video decoding. This is applicable when doing an audio-only analysis. Note that if you are analyzing deep_properties of the video (e.g. black detection or VMAF), this option is not applicable.

skip_video_decoding is a Boolean option that is placed in the source_pipeline of the analyze_task.

{
  "uid": "analyze_task",
  "kind": "analyze",
  "payload": {
    "source_pipeline": {
      "skip_video_decoding": true   <--- placed here
    },
    "general_properties": {
      "enabled": true
    },
    "deep_properties": {
      "audio": [
        {
          "track_selector": {
            "index": 1
          },
          "dplc": {
            "enabled": true,
            "regulation_type": "atsc_a85_agile"
          }
        }
      ]
    }
  }
}
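
If you build analyze tasks programmatically, the flag can be set automatically when only audio analysis is requested. This Python sketch assumes that video deep_properties would sit under a "video" key, parallel to the "audio" key in the example above; the function name is hypothetical.

```python
def enable_skip_video_decoding(analyze_task):
    """Set source_pipeline.skip_video_decoding when no video deep_properties
    are requested. Returns True if the flag was set."""
    payload = analyze_task.setdefault("payload", {})
    deep = payload.get("deep_properties", {})
    if "video" in deep:
        return False  # e.g. black detection or VMAF needs decoded video
    payload.setdefault("source_pipeline", {})["skip_video_decoding"] = True
    return True
```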

853x480 vs 720x480 and the thin green line

When processing a source encoded at a fixed 853x480 instead of 720x480, transcodes to a 720x480 (16:9) output can show a thin green vertical line on the right side.

To prevent the green line from appearing in the transcode, crop 1 pixel from the right side. The include_conditions entry below applies the crop only to files that are 853 pixels wide.

"filters": [
  {
    "include_conditions": [
      "source.video.width == 853"
    ],
    "kind": "crop",
    "payload": {
      "left": 0,
      "right": 1,
      "top": 0,
      "bottom": 0
    }
  }
]

Error setting option LRA to value -20

This happens when a negative value is given to loudness_lra_lufs in a normalize filter, as below:

"filters": [
  {
    "kind": "normalize",
    "payload": {
      "kind": "ebur128",
      "payload": {
        "allow_unprecise_mode": false,
        "integrated_lufs": -16.45,
        "loudness_lra_lufs": -12.6,
        "true_peak_dbfs": -3.5,
        "analyzer_track_index": 0
      }
    }
  }
]

For a normalize audio filter, loudness_lra_lufs must be between 1 and 20. In the analyze task, however, the same loudness_lra_lufs parameter is given a negative value, as below:

{
  "uid": "analyze_task",
  "kind": "analyze",
  "payload": {
    "general_properties": {
      "enabled": true
    },
    "deep_properties": {
      "audio": [
        {
          "ebur128": {
            "enabled": true,
            "scale_meter": 18,
            "target_reference": {
              "integrated_lufs": -16.45,
              "loudness_lra_lufs": -12.6,
              "true_peak_dbfs": -3.5
            }
          }
        }
      ]
    }
  }
}
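
A pre-submit check can catch this before the job fails. This Python sketch looks at a normalize filter shaped like the first example in this section and flags a loudness_lra_lufs outside the 1 to 20 range stated above; the function name is hypothetical, and it deliberately ignores analyze tasks, where negative values are expected.

```python
def check_normalize_lra(filter_obj):
    """Return problems with a normalize filter's loudness_lra_lufs."""
    if filter_obj.get("kind") != "normalize":
        return []
    inner = filter_obj.get("payload", {}).get("payload", {})
    lra = inner.get("loudness_lra_lufs")
    if lra is not None and not 1 <= lra <= 20:
        return [f"loudness_lra_lufs={lra} is outside 1..20 for a normalize filter"]
    return []


bad = {"kind": "normalize",
       "payload": {"kind": "ebur128", "payload": {"loudness_lra_lufs": -12.6}}}
print(check_normalize_lra(bad))
# ['loudness_lra_lufs=-12.6 is outside 1..20 for a normalize filter']
```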

Error: "Bad file format" when trying to read some .srt subtitle files

This error can be caused by UTF-16 encoding of the SRT. If the SRT is converted from UTF-16 with BOM to UTF-8 with BOM (for example, with a text editor like Notepad++), the subtitle content can be read.
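
The same conversion can be scripted instead of done in a text editor. This Python sketch decodes UTF-16 (the BOM tells the codec the byte order) and re-encodes as UTF-8 with a BOM via the standard utf-8-sig codec; it works on raw bytes so the SRT's line endings are preserved. The function names are hypothetical.

```python
from pathlib import Path


def utf16_to_utf8_bom(data: bytes) -> bytes:
    """Re-encode UTF-16 bytes (with BOM) as UTF-8 with BOM."""
    text = data.decode("utf-16")     # consumes the BOM and picks the byte order
    return text.encode("utf-8-sig")  # utf-8-sig prepends the UTF-8 BOM


def convert_srt(src, dst):
    """Read a UTF-16 SRT file and write a UTF-8 (with BOM) copy."""
    Path(dst).write_bytes(utf16_to_utf8_bom(Path(src).read_bytes()))
```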

Using a placeholder such as {source_name} or {source_url} in a notify message after a qc task fails

If a notify task needs to provide information such as the source file location after a qc task fails, you must include two elements (source and qc_task) in the "from" list of the connection leading "to" the notify task. Example:

{
  "from": [
    {
      "element": "source"
    },
    {
      "element": "qc_task"
    }
  ],
  "to": {
    "error": [
      {
        "element": "notify_task"
      }
    ]
  }
}

608 or 708 captions don't show up in the target files

This can happen when the source already has embedded captions and you are also trying to embed a sidecar .scc file. Disabling the source's closed captions in its contents array, as below, resolves the issue:

"contents": [
  {
    "kind": "video"
  },
  {
    "kind": "closed_caption",
    "mode": "disabled"
  }
],

Error: "Unsupported number of channels: 8"

This error results from feeding an analyze_task with 8 discrete tracks instead of a single track with either 2 or 6 channels. Use an audio map to create the appropriate number of channels per track, either before the analyze_task or in the source_pipeline of the analyze_task.

Error: "video codec not supported"

The job summary shows the following error message:

"message": "exception in package task",
"details": "ERROR: video codec not supported",

This can be caused by placing a target such as thumbnail creation in the transcode task that generates the streaming ladder ahead of the package task. All outputs of the ladder transcode task are passed to the package task, but the package task cannot handle the JPEG video codec used for thumbnails. Put the thumbnail creation into a task separate from the encoding ladder, one that does not feed into the package task.

Error: "premature transcode end error: 0xBAAD2682, stream data inactivity"

Sample error:

"message": "exception in core analyze",
"details": "premature transcode end error: 0xBAAD2682, stream data inactivity for 900 seconds",
"diagnostic": "too long (14027), beginning:\n\ntoo long (74859), beginning:\n\n{ fil

The underlying issue is that Hybrik cannot correctly access the source content. For example, if a source HTTP server reports a content length of 50GB but the actual source is only 37GB, Hybrik can get stuck trying to read data that doesn't exist.
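
To test whether an HTTP source has this problem, you can compare the Content-Length header with the number of bytes the server actually delivers. This Python sketch uses only the standard library; it downloads the whole file, so it is slow for large sources, and a server that stalls instead of closing the connection will raise a timeout error after `timeout` seconds. The function names are hypothetical.

```python
import http.client
import urllib.request


def count_bytes(stream, chunk_size=1 << 20):
    """Read a file-like object to the end and return the number of bytes read."""
    total = 0
    try:
        while chunk := stream.read(chunk_size):
            total += len(chunk)
    except http.client.IncompleteRead as exc:
        total += len(exc.partial)  # body ended before Content-Length was reached
    return total


def check_http_source(url, timeout=60):
    """Return (reported Content-Length or None, bytes actually received)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        reported = resp.headers.get("Content-Length")
        actual = count_bytes(resp)
    return (int(reported) if reported is not None else None), actual
```

A reported size larger than the received size points to the mismatch described above.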

UID with /: ENOENT: no such file or directory

Hybrik incorporates the target uid into the temp directory path for some of its processes. When a / is present in the target uid, the host instance treats it as part of a file path that does not exist, causing the error ENOENT: no such file or directory. This may also happen with other characters or spaces.

Example of uid in target with /:

"uid": "vault/F5/1E/F51E5017-4AE2-443B-B40B-1B97BA99CAB1"

Example of error created:

"details": "ENOENT: no such file or directory, open '/tmp/hybrik/transcode/20162637_s0-12/side_cars_0/0/840216816633_Pharma_Bro_de_DE_Feature-DE5CED1A-6B96-4FA3-BCE8-BB8C0A57A101.itt_ger_stitched.ttml_pre_pyc.ttml_itt_profile_vault/F5/1E/F51E5017-4AE2-443B-B40B-1B97BA99CAB1'",

Simply removing the / from the target uid avoids this error.

Example of uid in target without /:

"uid": "vaultF51EF51E5017-4AE2-443B-B40B-1B97BA99CAB1"

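When target uids are generated from asset IDs or paths, you can sanitize them before building the job. This Python sketch keeps only letters, digits, underscores, and hyphens; that allowlist is a conservative assumption, since this page only confirms that / (and possibly other characters or spaces) causes the error. The function name is hypothetical.

```python
import re


def safe_uid(uid):
    """Remove every character except A-Z, a-z, 0-9, '_' and '-'."""
    return re.sub(r"[^A-Za-z0-9_-]", "", uid)


print(safe_uid("vault/F5/1E/F51E5017-4AE2-443B-B40B-1B97BA99CAB1"))
# vaultF51EF51E5017-4AE2-443B-B40B-1B97BA99CAB1
```

Because characters are dropped rather than replaced, two different inputs can map to the same uid, so check that the sanitized uids in a job are still unique.
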
Error: "Cannot read property 'split' of undefined"

This error is reported when an SRT subtitle source contains an entry with an empty line between the timing line and the text, as in entry 255 below:

254
00:14:00,840 --> 00:14:03,000
Could all combat-ready squadrons
proceed--?

255
00:14:03,210 --> 00:14:04,660
(empty line)   <-- should not have a line break
It's a new day, people.

Removing the extra line break will resolve the problem.
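
For long files, the extra line breaks can be removed with a script. This Python sketch drops blank lines that directly follow a timing line; it writes \n line endings, and an entry whose text is genuinely empty would be merged with the next entry, so review the output. The function name is hypothetical.

```python
def remove_blank_after_timing(srt_text):
    """Drop blank lines that directly follow an SRT timing line."""
    out = []
    for line in srt_text.splitlines():
        if line.strip() == "" and out and "-->" in out[-1]:
            continue  # blank line between the timing line and the text
        out.append(line)
    return "\n".join(out) + "\n"
```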

Error: "pipeline error: what(): CRITICAL: CStorageIO::OnRequest core_read error"

Hybrik will report this error if a source file is deleted while it is being processed.